ViewLift Sitemaps & Robots.txt

About Sitemaps

A sitemap is a file that lists the URLs of the pages on your website. It helps search engines such as Google, Bing, and Yahoo crawl your website more effectively, because they use the sitemap to discover your pages, understand how your site is structured, and see which pages you consider most important.

ViewLift uses an XML sitemap generator, which means the sitemap file is formatted in XML, a format that search engines can read easily.
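For reference, an XML sitemap follows the sitemaps.org protocol: a `urlset` root element with one `url` entry per page, each holding a required `loc` and an optional `lastmod`. The Python sketch below builds such a file with the standard library. It only illustrates the format and is not ViewLift's generator; the example URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Return a minimal XML sitemap for (url, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in entries:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = url  # required: absolute page URL
        if lastmod:
            # optional: last modification date in W3C Datetime format
            ET.SubElement(url_el, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://www.example.tv/", "2024-01-15"),  # hypothetical URLs
    ("https://www.example.tv/shows", None),
]))
```

ElementTree escapes characters such as `&` in URLs automatically, which the protocol requires.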

By default, the ViewLift Tools platform supports the following types of sitemaps:

- Page Sitemap: The most common type of sitemap XML file. It should list all the pages on your site, for example: https://www.example.tv/page-sitemap.xml

- Video Sitemap: A sitemap that must include all the video pages of your website.

- Shows (Series) Sitemap: Sitemap that must include URLs of all your series/shows content.

ViewLift's sitemaps fetch the XML and related data for every link to give crawlers an optimized experience.

In addition to the three default sitemaps above, Tools can create custom sitemaps if necessary. Custom sitemaps are limited to a predefined, fixed set of file names on the website: sitemap1.xml, sitemap2.xml, ..., sitemap10.xml.
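If you track custom sitemaps in a script, a small check like the following keeps file names within that fixed set. These are hypothetical helpers (`is_allowed_custom_sitemap` and `next_free_custom_sitemap` are illustrative names, not part of Tools) and assume exactly the ten names listed above.

```python
# Hypothetical helpers; the allowed names follow the fixed set sitemap1.xml ... sitemap10.xml.
ALLOWED_CUSTOM_SITEMAPS = [f"sitemap{i}.xml" for i in range(1, 11)]


def is_allowed_custom_sitemap(name):
    """Return True if `name` is one of sitemap1.xml ... sitemap10.xml."""
    return name in ALLOWED_CUSTOM_SITEMAPS


def next_free_custom_sitemap(used):
    """Return the first predefined name not in `used`, or None if all ten are taken."""
    for name in ALLOWED_CUSTOM_SITEMAPS:
        if name not in used:
            return name
    return None


print(is_allowed_custom_sitemap("sitemap10.xml"))  # True
print(is_allowed_custom_sitemap("sitemap11.xml"))  # False
print(next_free_custom_sitemap({"sitemap1.xml", "sitemap2.xml"}))  # sitemap3.xml
```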

For information on how to build and submit a sitemap, see this Google article.

About Robots.txt file

Google and Bing discover the pages on your website by following links, including links from other websites, and by reading the URLs listed in your sitemap. A robots.txt file tells search engine crawlers which pages they are not allowed to crawl.

A robots.txt file contains blocks of directives that tell bots which pages of your site they are allowed to crawl. Use the Disallow directive to stop crawlers from accessing specific content. For example, you can add pages that are under construction, user account pages, and localized pages to the robots.txt file, as illustrated below. The sample file tells Google not to crawl any localized pages under /bn/ (the language code for Bengali) or specific pages such as /food-recipe/ and /all-hindi/, so Google and Bing will not crawl those pages. Note that a URL blocked in robots.txt can still appear in search results if other sites link to it; to keep a page out of search results, use the "no-index" option described later in this article.

A sample Robots.txt file
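To sanity-check rules like those in the sample before uploading them, you can use Python's standard `urllib.robotparser`. The rules below are reconstructed from the description of the sample file (`/bn/`, `/food-recipe/`, `/all-hindi/`); the `User-agent: *` group and the `www.example.tv` host are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser

# Reconstructed from the description of the sample; "User-agent: *" is an assumption.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /bn/
Disallow: /food-recipe/
Disallow: /all-hindi/
"""


def crawl_report(robots_txt, user_agent, base_url, paths):
    """Map each path to True (crawling allowed) or False (blocked) under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {path: parser.can_fetch(user_agent, base_url + path) for path in paths}


report = crawl_report(
    SAMPLE_ROBOTS_TXT,
    "Googlebot",
    "https://www.example.tv",  # hypothetical host
    ["/bn/movies", "/food-recipe/", "/all-hindi/", "/shows"],
)
for path, allowed in report.items():
    print(path, "allowed" if allowed else "blocked")
```

`urllib.robotparser` uses simple prefix matching and does not implement Google's `*` and `$` path wildcards, so treat this as a quick check rather than an exact model of how Googlebot reads the file.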

Once you are satisfied with the file, replace it in the root directory of your website host and submit it to Google and Bing.

How to update the Robots.txt file

- Go to the client's S3 bucket: appcms-config-prod

- Go to your site folder. The folder name is your siteId, for example: 6s231280-p30d-67rt-f67c-970l45e5w7c7

- Open the static folder. If the robots.txt file exists, download it, update it, and re-upload it to: s3://appcms-config-prod/6s231280-p30d-67rt-f67c-970l45e5w7c7/static/robots.txt

- If the file doesn't exist, create a robots.txt file on your computer with your entries and upload it to the same location.
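If you script the update, the steps above come down to building the S3 location from your siteId and appending new directives to the downloaded file. The sketch below is a hypothetical helper, not a ViewLift tool. It assumes the file has a single `User-agent: *` group, because lines appended to the end of a robots.txt file belong to the last group in the file.

```python
BUCKET = "appcms-config-prod"


def static_file_uri(site_id, filename):
    """Build the S3 URI of a file in a site's static folder."""
    return f"s3://{BUCKET}/{site_id}/static/{filename}"


def add_robots_rules(existing, new_lines):
    """Append directive lines to robots.txt text, skipping lines already present."""
    lines = existing.splitlines()
    seen = {line.strip() for line in lines}
    for line in new_lines:
        key = line.strip()
        if key and key in seen:
            continue  # identical directive already in the file
        lines.append(line)
        seen.add(key)
    return "\n".join(lines) + "\n"


site_id = "6s231280-p30d-67rt-f67c-970l45e5w7c7"  # example siteId from this article
print(static_file_uri(site_id, "robots.txt"))
print(add_robots_rules("User-agent: *\nDisallow: /bn/\n",
                       ["Disallow: /bn/", "Disallow: /food-recipe/"]))
```

The download and upload themselves can be done with the AWS CLI, for example `aws s3 cp s3://appcms-config-prod/<siteId>/static/robots.txt robots.txt` to download and the same command with the two arguments swapped to upload, assuming you have access to the bucket.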

Alternatively, you can send us the latest file and we will re-upload it to the root directory.

How to optimize your sitemaps for organic rankings

There are several things you can do to optimize your sitemaps for search engines:

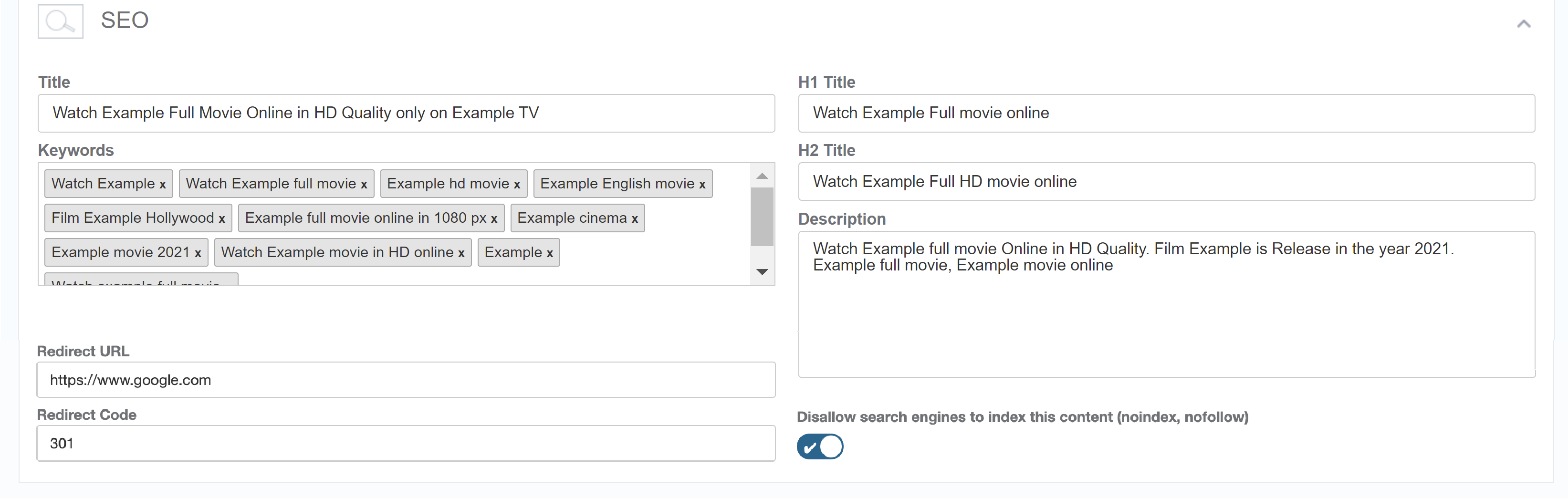

- Implement 301 Redirects and mark pages as "No-Index"

These features are important for avoiding 404 errors on your site.

- Implement 301 Redirects: If you plan to retire certain pages, set up 301 redirect rules that send users and search engines to relevant, active pages. The 301 status code tells browsers and crawlers that the content has moved permanently.

- Mark Pages as "No-Index": For content that you want to exclude from search engine indexing, activate the "no-index" toggle as shown below. This helps prevent search engines from indexing and displaying those pages in search results.

- Google limits video descriptions to 2,048 characters; exceeding this limit can cause indexing issues for your video pages, so keep descriptions within that length.
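To confirm that a page is excluded from indexing, you can look for a robots meta tag in its HTML. The sketch below assumes the "no-index" toggle renders a tag such as `<meta name="robots" content="noindex">`; if the platform instead sends an `X-Robots-Tag` HTTP header, check the response headers. It uses only Python's standard `html.parser`, and `is_noindex` is a hypothetical helper name.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # html.parser already lowercases tag and attribute names.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def is_noindex(html):
    """Return True if the page's HTML carries a noindex robots directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" in parser.directives


print(is_noindex('<head><meta name="robots" content="noindex, nofollow"></head>'))  # True
print(is_noindex('<head><meta name="robots" content="index, follow"></head>'))  # False
```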

- Region-Specific Sitemaps

To further prevent 404 errors originating from sitemaps, the ViewLift Tools platform provides region-specific sitemap XML. The content included in the sitemap is tailored to the region or country from which the request originated, so the sitemap lists only content that is licensed for, and relevant to, that region.

Note: If soft 404 errors still persist, consider temporarily disabling the firewall for the pages that cause the error.

- Reduced initial HTML size: We have reduced the total size of the initial HTML sent from the server to around 16 KB.

- Check content permalinks for spaces, which can cause pages to break, and avoid special characters such as double quotes.

- Use different sitemaps for different types of content. For example, you could create separate sitemaps for images, videos, and blog posts.

- HSTS enabled: The ViewLift Tools platform has HSTS (HTTP Strict Transport Security) enabled for its clients. This helps you avoid being flagged by SEO crawlers for assets served over HTTP instead of the secure HTTPS protocol.

- Internal links are links from one page on your website to another. They help search engines understand the structure of your website and the relationships between your pages. If you don't have any internal links to your pages, it may be difficult for search engines to find and index them.
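Permalink problems such as spaces, double quotes, and apostrophes can be caught before publishing with a simple scan. The character set below is a conservative choice made for this sketch, not a ViewLift rule, and the helper names are hypothetical. `suggest_slug` keeps only ASCII letters and digits, so it may not suit titles written in non-Latin scripts.

```python
import re

# Conservative set of characters to flag in permalinks (chosen for this sketch):
# whitespace, double quotes, apostrophes, and other characters that are unsafe in URLs.
_UNSAFE_CHAR = re.compile(r"""[\s"'<>#{}|\\^`]""")


def find_unsafe_chars(permalink):
    """Return each flagged character found in the permalink, in order of first appearance."""
    found = []
    for ch in permalink:
        if _UNSAFE_CHAR.fullmatch(ch) and ch not in found:
            found.append(ch)
    return found


def suggest_slug(title):
    """Build a lowercase, hyphen-separated slug from ASCII letters and digits only."""
    words = re.findall(r"[a-z0-9]+", title.lower().replace("'", ""))
    return "-".join(words)


print(find_unsafe_chars('my movie "2"'))  # [' ', '"']
print(suggest_slug("Chef's Special: \"Biryani\" Night"))  # chefs-special-biryani-night
```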
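When content is split across several sitemaps, the sitemaps.org protocol lets you list them all in a single sitemap index file (`sitemapindex`). The sketch below builds one; the page sitemap URL comes from the Page Sitemap example earlier in this article, while the video and shows sitemap URLs are hypothetical placeholders. Whether Tools publishes such an index for you is not covered here.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap_index(sitemap_urls):
    """Return a sitemap index (sitemaps.org protocol) that lists each child sitemap."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = url
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(index, encoding="unicode")


print(build_sitemap_index([
    "https://www.example.tv/page-sitemap.xml",
    "https://www.example.tv/video-sitemap.xml",  # hypothetical name
    "https://www.example.tv/shows-sitemap.xml",  # hypothetical name
]))
```

Submitting the index URL lets a search engine discover every child sitemap from one entry.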

Submit/resubmit your sitemaps

Once ViewLift generates your sitemap, submit it to Google Search Console (GSC) and Bing Webmaster Tools. You can find instructions on how to do this here.

To submit or resubmit sitemaps in GSC:

- Go to the Search Console for your website.

- Click Sitemaps > Add a sitemap.

- Enter the URL of your new sitemap and click the Submit button.

- Once your sitemap has been submitted, you can view the status of your sitemap in the Sitemaps tab.

To learn more, see this Google article.

In Bing Webmaster Tools, you can use the Crawl Control feature to configure the crawl rate hour by hour around your site's peak usage times.

Create/update ads.txt file

To update the ads.txt file, go to the S3 bucket appcms-config-prod and open your site folder. The folder name is your site ID, an alphanumeric string such as 165508g3-6deb-78bc-8l90-f31304589f70. Go to the static folder and check whether the ads.txt file exists.

If it does, download the file, add the new entries at the bottom, save it, and re-upload it to: s3://appcms-config-prod/165508g3-6deb-78bc-8l90-f31304589f70/static/ads.txt

If the file doesn't exist, create an ads.txt file locally with the records and upload it.
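ads.txt records follow the IAB Tech Lab format: the ad system's domain, your publisher account ID, the relationship type (`DIRECT` or `RESELLER`), and an optional certification authority ID, separated by commas. The sketch below appends new records at the bottom of an existing file while skipping duplicates. The ad system domains and IDs are hypothetical, the helper names are illustrative, and it assumes the existing file is well formed.

```python
def parse_ads_record(line):
    """Parse an ads.txt data record into (domain, account_id, relationship).

    Returns None for blank lines, comments, and variable lines such as
    "contact=..."; raises ValueError for malformed records.
    """
    content = line.split("#", 1)[0].strip()  # drop comments
    if not content or ("=" in content and "," not in content):
        return None
    fields = [field.strip() for field in content.split(",")]
    if len(fields) < 3 or fields[2].upper() not in ("DIRECT", "RESELLER"):
        raise ValueError(f"malformed ads.txt record: {line!r}")
    return (fields[0].lower(), fields[1], fields[2].upper())


def append_ads_records(existing, new_lines):
    """Add new records at the bottom of the file, skipping ones already present."""
    lines = existing.splitlines()
    known = {parse_ads_record(line) for line in lines} - {None}
    for line in new_lines:
        record = parse_ads_record(line)
        if record is None or record in known:
            continue
        lines.append(line.strip())
        known.add(record)
    return "\n".join(lines) + "\n"


current = "# ads.txt\nadexchange.example, 12345, DIRECT\n"  # hypothetical records
print(append_ads_records(current, [
    "adexchange.example, 12345, DIRECT",   # duplicate, skipped
    "resellernet.example, 678, RESELLER",  # new, appended
]))
```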

FAQs

Q. We conducted a site audit for our website. The audit indicates there are incorrect pages listed in the sitemaps.

Unpublished and test pages should not appear in the sitemaps. For example, we disallow crawling of your Dev, QA, and UAT environments in their robots.txt files. You can check this by visiting the environment's robots.txt URL, for example, qa.yourservicename.com/robots.txt.

Googlebot can handle geo-blocked URLs in sitemaps, and ViewLift does not need to take any additional action. For example, if you have enabled geo-restrictions for the USA, your server will block a Googlebot request that appears to come from the USA but allow one that appears to come from Australia. This works because Googlebot can crawl from IP addresses in several countries, not only the USA. See this Google article for more information.

Q. Slow indexing experience.

Google may take several days, or up to a week, to index new URLs submitted through Search Console. Consider submitting important URLs manually with the URL Inspection tool in Google Search Console.

If you see duplicate content, missing internal links, apostrophes in video permalinks or titles, or other errors in your sitemap, fix these issues before submitting your sitemap to Google so that Google can crawl and index your website properly. If you have fixed all of the errors in your sitemap and Google is still not indexing your new URLs, you can request re-indexing. However, Google is not obligated to re-index your website, and processing your request may take some time.

Q. If my website supports two languages, should I submit two versions of sitemaps?

Yes, create a sitemap for each language version of your website. You can use the URL Inspection tool in Google Search Console to test individual URLs listed in your sitemap. When you submit a sitemap to Google, check the following information:

- The URL of the sitemap

- The language of the sitemap

- The date the sitemap was last updated

Google can then crawl the sitemap and index the content in the correct language. Use the Sitemaps report in Google Search Console to check your sitemaps for errors, and make sure the hreflang annotations are implemented correctly.
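One way to connect language versions is to add hreflang annotations to each language's sitemap with `xhtml:link` elements, the format Google documents for sitemaps. The sketch below builds the sitemap for one language; each `url` entry lists every language version of that page, including itself. The URLs, the `en`/`bn` language codes, and the `build_language_sitemap` name are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
XHTML_NS = "http://www.w3.org/1999/xhtml"


def build_language_sitemap(pages, lang):
    """Build the sitemap for one language.

    `pages` is a list of dicts, each mapping a language code to that page's URL.
    Every <url> lists all language versions of the page (including itself)
    as <xhtml:link rel="alternate" hreflang="..."> elements.
    """
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS, "xmlns:xhtml": XHTML_NS})
    for versions in pages:
        if lang not in versions:
            continue  # this page has no version in the requested language
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = versions[lang]
        for alt_lang, alt_url in versions.items():
            ET.SubElement(url_el, "xhtml:link",
                          {"rel": "alternate", "hreflang": alt_lang, "href": alt_url})
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


pages = [{"en": "https://www.example.tv/en/movie-1",  # hypothetical URLs
          "bn": "https://www.example.tv/bn/movie-1"}]
print(build_language_sitemap(pages, "bn"))
```

Generating the English sitemap is the same call with `"en"`; both files then point at each other's URLs through the alternate links.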

Q. Crawl blockage and pages are missing on certain sitemaps.

Your video sitemap may previously have contained more than 3,000 video pages, but the page count now shows only 33, as illustrated in the example above.

Tips for submitting multiple sitemaps

- Use the same URL structure for all of your sitemaps.

- Use a unique identifier for each sitemap. This will help you track the performance of each sitemap.

- Submit the sitemaps to search engines regularly. This helps Google and Bing keep your website's content up to date.
